Actually stopping AI scrapers from taking down my server

In my earlier post about Forgejo and scraper bots, I mentioned that adding a robots.txt file helped reduce scraping. It turns out the bots only disappeared because I’d made the repository private, so they were getting nothing but 404 errors. They weren’t actually respecting robots.txt (although Google, at least, was).

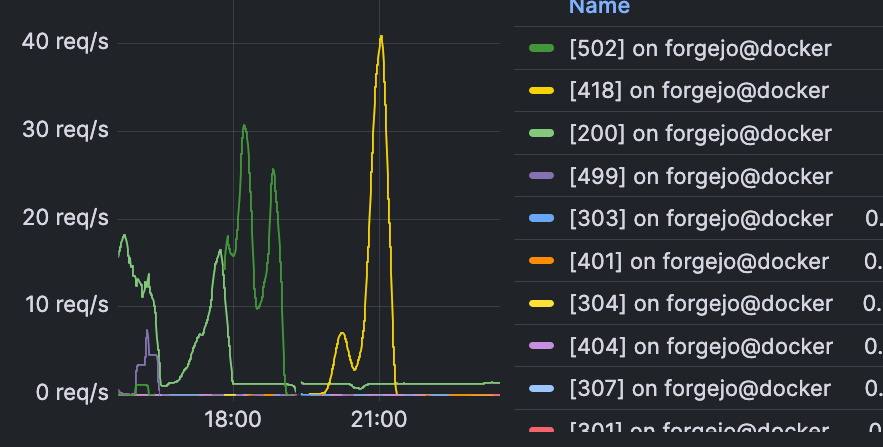

Around April 17th, they returned. I set up rate limiting with Traefik’s built-in middleware, but this traffic wasn’t coming from just one or two IPs. It seemed to originate from two entire /16 blocks within Alibaba’s ASN. I then configured a Traefik plugin that rate-limits per IP block and serves a CAPTCHA once the limit is reached. I set a fairly low limit, which at first slowed the request rate. However, it also got in the way of automated tools and legitimate users, so once the request volume had subsided I raised the limit to stop it causing further problems.
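Rate limiting per IP block rather than per address can be sketched as a token bucket keyed by network prefix. This is a minimal Python illustration and not the Traefik plugin’s implementation. The class name, the /48 default for IPv6, and the rate and burst values are assumptions chosen for illustration.

```python
import ipaddress
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Bucket:
    tokens: float   # requests currently allowed
    updated: float  # timestamp of the last refill


class PrefixRateLimiter:
    """Token bucket shared by every address in the same network prefix.

    Hypothetical sketch: all IPv4 clients in one /16 (and IPv6 clients in
    one /48) draw from a single bucket, so rotating addresses inside the
    block does not reset the limit.
    """

    def __init__(self, rate: float, burst: float, v4_prefix: int = 16, v6_prefix: int = 48):
        self.rate = rate      # tokens added per second
        self.burst = burst    # maximum tokens a bucket can hold
        self.v4_prefix = v4_prefix
        self.v6_prefix = v6_prefix
        self.buckets: Dict[str, Bucket] = {}

    def key(self, ip: str) -> str:
        addr = ipaddress.ip_address(ip)
        prefix = self.v4_prefix if addr.version == 4 else self.v6_prefix
        # strict=False masks off the host bits: 10.1.2.3 -> 10.1.0.0/16
        return str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False))

    def allow(self, ip: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        bucket = self.buckets.setdefault(self.key(ip), Bucket(tokens=self.burst, updated=now))
        # Refill in proportion to elapsed time, capped at the burst size.
        bucket.tokens = min(self.burst, bucket.tokens + (now - bucket.updated) * self.rate)
        bucket.updated = now
        if bucket.tokens >= 1:
            bucket.tokens -= 1
            return True
        return False  # over the limit: serve a CAPTCHA or a 429 instead
```

Example: with `PrefixRateLimiter(rate=1.0, burst=3)`, four simultaneous requests from 10.1.0.1, 10.1.50.2, 10.1.99.3 and 10.1.200.4 return True, True, True, False, because all four share 10.1.0.0/16. The prefix length is the main trade-off. A coarser key makes it harder for a bot to rotate out of its bucket, but it also makes it more likely that legitimate users in the same block hit the limit.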

They returned on May 13th. I was in my exam period and had little time for countermeasures, so at first I let it be. The next morning, while I was sitting an exam, the entire server went down. The bots had discovered the links to auto-generated source archives and were downloading them at up to 100 Mb/s. Forgejo generates each archive on request and keeps it on disk until a cleanup job removes it, so the disk filled up at an alarming rate. Once hundreds of gigabytes of archives had consumed all the disk space, memory usage spiked as well. The combination of full-disk errors and high memory pressure took most of my services offline, and databases began failing writes.

Two hours after the server went offline, I regained access, ran the cron job that cleans up the archives, and restarted the server to make sure everything came back in a stable state. I was still busy with exams, but devising a proper mitigation strategy became my top priority.
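At the 100 Mb/s mentioned above, the bots were pulling 12.5 MB/s, which is roughly 45 GB of new archives per hour. That suggests capping archive storage by size as well as by age. The sketch below is a hypothetical guard and not Forgejo’s built-in cleanup job. It deletes cached archives older than an age limit, then deletes the oldest remaining files until the directory fits a size budget. `prune_archives` and its parameters are made up for illustration, and `archive_dir` stands in for whatever path your instance uses.

```python
import time
from pathlib import Path
from typing import List, Optional


def prune_archives(archive_dir: Path, max_age_seconds: float, max_total_bytes: int,
                   now: Optional[float] = None) -> List[Path]:
    """Delete archives older than max_age_seconds, then delete oldest-first
    until the directory fits in max_total_bytes. Returns the removed paths.

    archive_dir is a placeholder for your instance's archive directory.
    """
    now = time.time() if now is None else now
    entries = []
    for path in archive_dir.rglob("*"):
        try:
            if path.is_file():
                entries.append((path, path.stat()))
        except FileNotFoundError:
            continue  # deleted by something else while we were scanning
    entries.sort(key=lambda entry: entry[1].st_mtime)  # oldest first
    total = sum(st.st_size for _, st in entries)

    removed = []
    for path, st in entries:
        too_old = now - st.st_mtime > max_age_seconds
        over_budget = total > max_total_bytes
        if not (too_old or over_budget):
            break  # every remaining file is newer and the budget is met
        path.unlink(missing_ok=True)
        total -= st.st_size
        removed.append(path)
    return removed
```

Sorting oldest-first lets the loop stop at the first file that is both recent and within budget. Every file after it is newer, and the running total no longer changes.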

Early on the 15th, they returned and began downloading ZIP files. They backed off once more of their requests ended in HTTP 499 (client closed request) than in 200, but my users noticed the slowdown. This pattern repeated until the 16th, when I finally had time to implement mitigations. Early that morning their activity became even more aggressive, and it continued throughout the day, giving me a live target for testing my mitigations.
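The 499-versus-200 pattern can be checked directly in an access log. The sketch below assumes Common Log Format-style lines, with the client IP first and the status code right after the quoted request line. It groups status codes by /16. The function names and the regex are illustrative assumptions, so adjust them to your proxy’s actual log format.

```python
import ipaddress
import re
from collections import Counter, defaultdict
from typing import Dict, Iterable

# Client IP, two ignored fields, [timestamp], "request line", status code.
# Anything after the status (size, referrer, user agent...) is ignored.
CLF_PREFIX = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "[^"]*" (\d{3})')


def status_by_prefix(lines: Iterable[str], prefix_len: int = 16) -> Dict[str, Counter]:
    """Count HTTP status codes per network prefix of the client address."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        match = CLF_PREFIX.match(line)
        if match is None:
            continue  # not a log line in the expected format
        ip, status = match.group(1), int(match.group(2))
        try:
            net = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
        except ValueError:
            continue  # e.g. a hostname instead of an IP
        counts[str(net)][status] += 1
    return counts


def is_backing_off(statuses: Counter) -> bool:
    """The pattern described above: more 499s (client gave up) than 200s."""
    return statuses[499] > statuses[200]
```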

The first solution I tried was CrowdSec. I set up the local API and the Traefik bouncer, but the built-in lists had little effect. I could now ban IP blocks using its command-line tools, but that didn’t achieve much either: the bots switched IPs almost immediately and resumed their activity.
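Turning a list of offending addresses into candidate range bans can be sketched with Python’s `ipaddress` module. The helper below is hypothetical and not a CrowdSec feature. It groups IPv4 addresses by /16 and keeps the prefixes that contain at least `min_hosts` distinct offenders. It then merges adjacent prefixes with `ipaddress.collapse_addresses`.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def ranges_to_ban(offending_ips: Iterable[str], prefix_len: int = 16,
                  min_hosts: int = 3) -> List[ipaddress.IPv4Network]:
    """Return prefixes holding at least min_hosts distinct offenders,
    with adjacent prefixes merged (two neighbouring /16s become a /15)."""
    hosts_by_net: Dict[ipaddress.IPv4Network, Set[ipaddress.IPv4Address]] = defaultdict(set)
    for ip in offending_ips:
        addr = ipaddress.IPv4Address(ip)  # IPv4 only in this sketch
        net = ipaddress.IPv4Network(f"{addr}/{prefix_len}", strict=False)
        hosts_by_net[net].add(addr)
    candidates = [net for net, hosts in hosts_by_net.items() if len(hosts) >= min_hosts]
    return list(ipaddress.collapse_addresses(candidates))
```

Range bans only help while the traffic stays inside known blocks. That limitation is why banning ranges did little here once the bots moved to other addresses.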

I then set up CrowdSec’s log parsing and scenarios. It seemed to ban a few IPs but couldn’t make even a small dent in the attack, despite parsing thousands of log lines per second with numerous ‘mitigations’ enabled.

Next, I tried go-away (GitHub). It looked much more flexible, and less likely to disrupt my users, than Anubis, a commonly mentioned tool for proof-of-work (PoW) checks. I moved the Traefik entrypoints to its container and used the example Forgejo configuration. Immediately, bot requests started receiving 502 errors as they were dropped, while legitimate baseline traffic continued to pass through. One of my users reported being blocked: the example configuration blocked several ASNs outright, including the one their IP address belonged to, so I removed that rule. The next spike was met with challenge pages and did not make it through. My users haven’t reported any other issues with go-away, and it’s nearly invisible during normal use.
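Challenge pages of this kind typically rely on proof of work. The server hands out a random challenge, and the client must find a nonce whose hash meets a difficulty target. The server then checks the answer with a single hash. The following is a generic hashcash-style sketch and not go-away’s or Anubis’s actual challenge format. The HMAC-signed challenge, the `challenge:nonce` encoding and the example difficulty are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side key; in practice load it from configuration.
SECRET = secrets.token_bytes(32)


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the start of a digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def _sign(token: str, secret: bytes) -> str:
    return hmac.new(secret, token.encode(), hashlib.sha256).hexdigest()


def issue_challenge(secret: bytes = SECRET) -> str:
    """Server: a random token plus its HMAC, so no per-client state is stored."""
    token = secrets.token_hex(16)
    return f"{token}.{_sign(token, secret)}"


def _work_ok(challenge: str, nonce: int, difficulty: int) -> bool:
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty


def solve(challenge: str, difficulty: int) -> int:
    """Client: brute-force a nonce; expected cost is about 2**difficulty hashes."""
    nonce = 0
    while not _work_ok(challenge, nonce, difficulty):
        nonce += 1
    return nonce


def verify(challenge: str, nonce: int, difficulty: int, secret: bytes = SECRET) -> bool:
    """Server: check the signature, then the work, with one hash each."""
    token, _, mac = challenge.partition(".")
    if not hmac.compare_digest(mac, _sign(token, secret)):
        return False  # forged or tampered challenge
    return _work_ok(challenge, nonce, difficulty)
```

Each extra bit of difficulty doubles the expected work. That cost is small for one visitor loading a page but adds up for a scraper making huge numbers of requests. Signing the challenge lets the server verify answers without storing state. A real deployment would also bind the challenge to the client and expire it to prevent replay; this sketch omits both.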

go-away supports TLS fingerprinting in its rules if HTTPS connections are passed through to it without being terminated first. I briefly experimented with this and got it working, but it cost me Traefik’s request statistics and HTTP/3 support, so I disabled it. The author has expressed interest in exposing the module as a Go library for use as a Caddy module. I would also like to see it as a Traefik plugin: that would allow integrated fingerprinting without these downsides, along with easier setup and configuration (especially across multiple services) and better performance. Even so, I’m already very pleased with how effective it is.
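TLS fingerprinting works on fields of the client’s ClientHello message. That is why the fingerprinting tool has to see the raw handshake rather than a connection Traefik has already terminated. JA3 is one well-known scheme, and it is sketched below; this is not necessarily the scheme go-away uses. JA3 joins the TLS version, cipher suites, extensions, elliptic curves and point formats as decimal values, dropping GREASE values (RFC 8701), and takes the MD5 of the result. Parsing the ClientHello itself is out of scope here, so the inputs are assumed to be already-extracted integers.

```python
import hashlib
from typing import Iterable, Tuple


def is_grease(value: int) -> bool:
    """GREASE values (RFC 8701) are 0x0A0A, 0x1A1A, ..., 0xFAFA."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def _join(values: Iterable[int]) -> str:
    # JA3 writes each list as decimal values separated by '-', GREASE removed.
    return "-".join(str(v) for v in values if not is_grease(v))


def ja3(tls_version: int, ciphers: Iterable[int], extensions: Iterable[int],
        curves: Iterable[int], point_formats: Iterable[int]) -> Tuple[str, str]:
    """Return the JA3 string and its MD5 hex digest for already-parsed
    ClientHello fields."""
    ja3_string = ",".join([
        str(tls_version),
        _join(ciphers),
        _join(extensions),
        _join(curves),
        _join(point_formats),
    ])
    digest = hashlib.md5(ja3_string.encode(), usedforsecurity=False).hexdigest()
    return ja3_string, digest
```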

The same cannot be said for CrowdSec, which I had left running in the background. Here are some of the issues it caused:

- It blocked my IP because my use of the Matrix client API triggered a ‘scenario’.

- It blocked my Forgejo runner’s IP, which was checking for jobs.

- It blocked my Forgejo runner when it attempted to upload a container image.

Meanwhile, it failed to detect a single actual scraper. It stopped ingesting logs early this morning, and I haven’t bothered to fix it yet.
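All three false positives above came from addresses that can be known in advance: my own IP and the runner’s. The usual guard against this is an allowlist check before any ban is issued. CrowdSec has its own whitelist mechanism. The snippet below does not use its configuration format and only sketches the logic. The networks in `ALLOWLIST` are placeholder documentation ranges.

```python
import ipaddress
from typing import Iterable, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Placeholder allowlist (documentation ranges), e.g. your own address and
# the CI runner's address.
ALLOWLIST = [
    ipaddress.ip_network("192.0.2.10/32"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def should_ban(ip: str, allowlist: Iterable[Network] = ALLOWLIST) -> bool:
    """Return False for addresses inside any allowlisted network.

    Mixed IPv4/IPv6 checks are safe: `addr in net` is simply False when
    the versions differ.
    """
    addr = ipaddress.ip_address(ip)
    return not any(addr in net for net in allowlist)
```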